Image-based semantic change detection in 3D-point clouds using automatically sourced training data

Author: Marten Precht

Supervisors: Prof. Dr. Kristian Kersting, Dr. Cigdem Turan

Submission: November 2019

Abstract:

Understanding 3D scenes is a useful but challenging task, and semantic instance labeling is a central part of it. Semantic instance labeling splits the space into a set of objects, each belonging to one of a fixed number of categories. This representation is natural and easy for humans to understand. Obtaining such representations poses several challenges. The first is creating the unlabeled representation of the space itself, which is most often done using point clouds, meshes, or voxels; the choice mainly depends on the available sensor data and the target application. Collecting high-quality data and converting it into good representations is a challenge of its own but is not part of this work.

This work focuses on the task of labeling point clouds. The task entails segmenting the entire point cloud into a set of disjoint point clouds and labeling each one of them. Labeling of point clouds has been an area of active research, and thanks to the availability of large and extensively annotated datasets, much progress has been made in recent years. However, one important limitation of the state of the art is its reliance on such large amounts of labeled data, which restricts it to general and popular class labels.

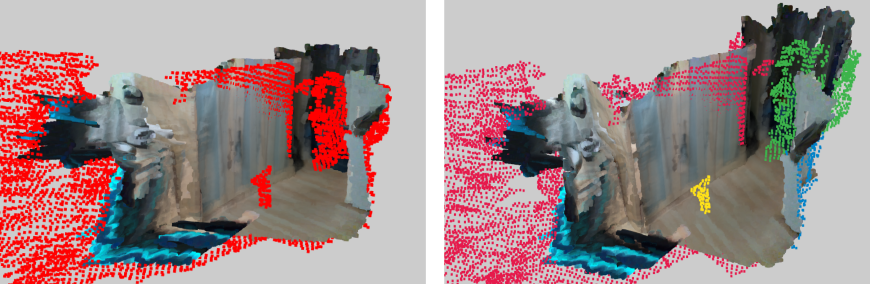

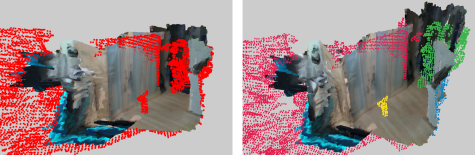

This thesis proposes a different algorithm that aims to solve a related task in a more scalable way: to semantically label the differences between two point clouds.

The difference between the point clouds already provides a useful segmentation, leaving only the task of labeling. The main advantage of the presented approach is that it does not rely on a dataset of labeled point clouds but can instead source training data (consisting of images) purely based on the names of the classes and the names of the objects contained in them. This enables easier labeling of any objects of interest that are large enough to be detected when comparing both point clouds, without the need for an expensive data collection and labeling process.
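To illustrate how the difference between two point clouds can serve as a segmentation, the following is a minimal sketch and not the method used in this thesis. It assumes that a point counts as changed when no point of the other cloud lies within a distance threshold, and that changed points are grouped into segments by simple distance-based linking. The function names `changed_points` and `group_segments`, the thresholds, and the toy coordinates are all hypothetical. The brute-force search is only suitable for very small clouds.

```python
import math

def changed_points(reference, target, threshold):
    """Return the points of `target` that have no point of `reference` within `threshold`."""
    if not reference:
        return list(target)
    return [
        p for p in target
        if min(math.dist(p, q) for q in reference) > threshold
    ]

def group_segments(points, radius):
    """Group points into segments: points within `radius` of each other share a segment."""
    unvisited = set(range(len(points)))
    segments = []
    while unvisited:
        stack = [unvisited.pop()]
        segment = []
        while stack:
            i = stack.pop()
            segment.append(points[i])
            # Collect all still-unassigned points close to the current one.
            near = [j for j in unvisited if math.dist(points[i], points[j]) <= radius]
            for j in near:
                unvisited.remove(j)
                stack.append(j)
        segments.append(segment)
    return segments

# Toy example (assumed data): two new objects appear in the second scan.
before = [(0, 0, 0), (1, 0, 0), (2, 0, 0)]
after = before + [(5, 5, 0), (5.1, 5, 0), (9, 9, 9)]

added = changed_points(before, after, threshold=0.5)    # points new in `after`
removed = changed_points(after, before, threshold=0.5)  # points missing from `after`
segments = group_segments(added, radius=0.5)            # one segment per changed object
```

Each resulting segment would then be passed to the labeling step, which is not shown here.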

This approach can be beneficial because, in potential applications, changes are often of greater interest than the static scene, for example when evaluating progress in a construction process.