TU Darmstadt’s Centre for Cognitive Science participated through its Artificial Intelligence and Machine Learning research group (Kristian Kersting). The Serious Games research group (Stefan Göbel) was also part of DeepSpaceBIM. The project partners were M.O.S.S. Computer Graphic Systems, Robotic Eyes, DMT, Steinmann Kauer Consulting, Drees & Sommer, and the Technical University of Darmstadt (TU Darmstadt).

Overview of AI-related research topics in DeepSpaceBIM:

We investigated how machine learning (ML) and artificial intelligence (AI) methods can support construction site personnel in their decision-making. It was also essential to assess whether these methods are applicable beyond narrowly defined technology demonstrations. Two factors played an important role here: the availability of data for applying the respective ML/AI methods, and the R&D effort likely required to make the envisaged functionalities work robustly.

The scenarios investigated were the automated detection of the construction status or progress (“Construction progress monitoring”) and the detection of specific, in particular safety-critical, situations in the building interior (Object recognition / “Inventory and security aspects”).

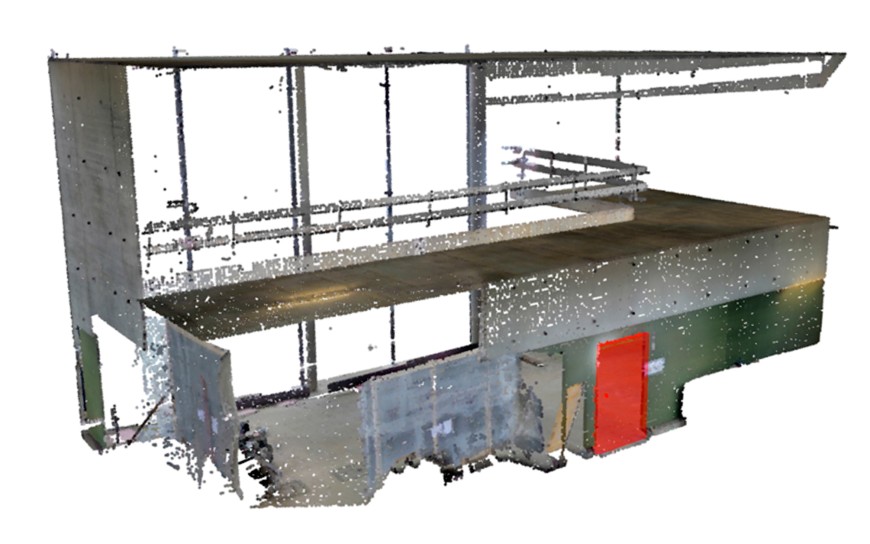

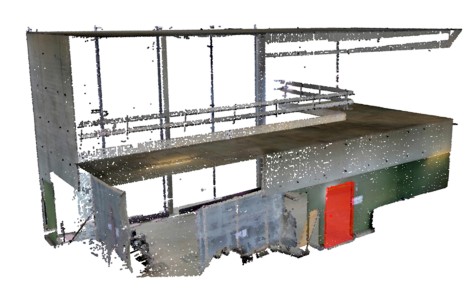

Initial tests confirmed that, owing to their specific characteristics, point cloud data (wide-area coverage) are better suited for analysing construction progress, whereas image/video data achieve better results for the live identification of objects. An investigation into algorithmically combining point clouds and image data for object recognition or progress analysis showed that the combination is feasible in principle, but it did not yield any clear advantages in our use case.

“Construction progress monitoring”:

In monitoring the construction progress, the goal was to automatically recognise the built elements and compare them with the plan data, e.g. from a BIM model. As an example, the project chose to detect walls and openings for doors and windows.

Our approach to detecting wall openings works on 3D point cloud data. It uses image processing techniques to analyse the density of the point cloud and applies optimisation algorithms to find relevant walls and matching wall openings within a given size range.
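The density-image idea can be illustrated with a minimal sketch; it is not the project's implementation. It assumes that the points of a single wall have already been segmented and projected into wall coordinates (x along the wall, z as height, in metres). The cell size, thresholds, size range and the function names `density_image` and `find_openings` are hypothetical, and a simple flood fill over empty cells stands in for the optimisation step described above.

```python
import numpy as np
from collections import deque


def density_image(points_xz, cell=0.05):
    """Rasterise wall points (x along the wall, z = height, in m) into a 2D count image."""
    x, z = points_xz[:, 0], points_xz[:, 1]
    x0, z0 = x.min(), z.min()
    # One extra cell so the grid always covers the largest coordinate
    nx = int(np.floor((x.max() - x0) / cell)) + 1
    nz = int(np.floor((z.max() - z0) / cell)) + 1
    counts, _, _ = np.histogram2d(
        x, z, bins=[nx, nz], range=[[x0, x0 + nx * cell], [z0, z0 + nz * cell]]
    )
    return counts, (x0, z0)


def find_openings(counts, origin, cell=0.05, min_size=(0.5, 0.5),
                  max_size=(2.0, 2.5), min_points=1, min_fill=0.8):
    """Return (x, z, width, height) for connected regions of sparse cells whose
    bounding box lies in the size range and which are roughly rectangular.
    `cell` must match the value used in density_image."""
    empty = counts < min_points
    seen = np.zeros_like(empty)
    nx, nz = empty.shape
    openings = []
    for i in range(nx):
        for j in range(nz):
            if not empty[i, j] or seen[i, j]:
                continue
            # Flood-fill one 4-connected region of empty cells
            region, queue = [], deque([(i, j)])
            seen[i, j] = True
            while queue:
                a, b = queue.popleft()
                region.append((a, b))
                for na, nb in ((a + 1, b), (a - 1, b), (a, b + 1), (a, b - 1)):
                    if 0 <= na < nx and 0 <= nb < nz and empty[na, nb] and not seen[na, nb]:
                        seen[na, nb] = True
                        queue.append((na, nb))
            cols = [c[0] for c in region]
            rows = [c[1] for c in region]
            w_cells = max(cols) - min(cols) + 1
            h_cells = max(rows) - min(rows) + 1
            width, height = w_cells * cell, h_cells * cell
            fill = len(region) / (w_cells * h_cells)  # 1.0 for a perfect rectangle
            if (min_size[0] <= width <= max_size[0]
                    and min_size[1] <= height <= max_size[1]
                    and fill >= min_fill):
                openings.append((origin[0] + min(cols) * cell,
                                 origin[1] + min(rows) * cell, width, height))
    return openings


# Synthetic 5 m x 2.5 m wall sampled every 2 cm, with a window and a door cut out
xs, zs = np.meshgrid(np.arange(0, 5.0, 0.02), np.arange(0, 2.5, 0.02), indexing="ij")
pts = np.column_stack([xs.ravel(), zs.ravel()])
window = (pts[:, 0] > 1.0) & (pts[:, 0] < 2.0) & (pts[:, 1] > 1.0) & (pts[:, 1] < 2.0)
door = (pts[:, 0] > 3.0) & (pts[:, 0] < 3.9) & (pts[:, 1] < 2.1)
counts, origin = density_image(pts[~(window | door)])
found = find_openings(counts, origin)
for x, z, w, h in found:
    print(f"opening at x={x:.2f} m, z={z:.2f} m, size {w:.2f} x {h:.2f} m")
```

Occlusions and scan shadows also produce empty cells; in this sketch, the size range and the rectangularity check (`min_fill`) are the only safeguards against such false openings.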

We also used BIM data representing the planned state of the building to perform a progress analysis and to evaluate the location accuracy of the detected wall openings.
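A plan-versus-as-built comparison of this kind might look like the minimal sketch below. It assumes that the planned openings have already been extracted from the BIM model into the same wall coordinate system as the detections. The `PlannedOpening` class, the greedy nearest-centre matching and the 0.3 m tolerance (`max_offset`) are illustrative assumptions, not the project's method. The centre offset serves as a simple measure of location accuracy, and the share of matched openings serves as a simple progress figure.

```python
import math
from dataclasses import dataclass


@dataclass
class PlannedOpening:
    element_id: str  # hypothetical BIM element identifier
    x: float         # lower-left corner along the wall (m)
    z: float         # lower-left corner above floor level (m)
    width: float
    height: float


def centre(x, z, width, height):
    return (x + width / 2, z + height / 2)


def compare_with_plan(planned, detected, max_offset=0.3):
    """Greedily match each planned opening to the nearest unused detection.

    detected: (x, z, width, height) tuples in the same wall coordinates.
    Returns (element_id, status, centre offset in m) per planned opening
    and the share of planned openings that were found.
    """
    remaining = list(detected)
    report = []
    for p in planned:
        target = centre(p.x, p.z, p.width, p.height)
        best, best_dist = None, math.inf
        for d in remaining:
            dist = math.dist(target, centre(*d))
            if dist < best_dist:
                best, best_dist = d, dist
        if best is not None and best_dist <= max_offset:
            remaining.remove(best)  # a detection can confirm only one planned opening
            report.append((p.element_id, "built", round(best_dist, 3)))
        else:
            report.append((p.element_id, "missing", None))
    built = sum(status == "built" for _, status, _ in report)
    return report, (built / len(planned) if planned else 0.0)


plan = [PlannedOpening("W-01", 1.0, 1.0, 1.0, 1.0),
        PlannedOpening("D-01", 3.0, 0.0, 0.9, 2.1),
        PlannedOpening("W-02", 4.2, 1.0, 0.6, 1.0)]
scan = [(1.05, 1.05, 0.95, 0.95), (3.05, 0.0, 0.85, 2.1)]
report, progress = compare_with_plan(plan, scan)
for row in report:
    print(row)
print(f"progress: {progress:.0%}")
```

Greedy matching keeps the sketch short. With many closely spaced openings, a global assignment (for example, the Hungarian algorithm) would avoid one opening "stealing" another's detection.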

Object recognition / “Inventory and security aspects”:

This work addressed the mobile, automated detection of specific objects and object classes with the aim of determining the current inventory. Special attention was paid to recognising safety-relevant facts and objects on the construction site, such as the placement of fire extinguishers.

We developed a fast neural model that works on image data and can be embedded in mobile applications. The algorithm used to train the recognition model allows new object classes to be added to it. To minimise the training effort, we adapted the commonly used transfer learning technique, in which a pre-trained model is fine-tuned with images of the object classes of interest. The pre-trained model was taken from the TensorFlow 1 Detection Model Zoo and fine-tuned with images of several object classes. MobileNetV3-Large, the latest version of MobileNet at the time, was used within the framework for this purpose. We used the freely available datasets ImageNet and FireNet as training data.
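The transfer learning idea can be illustrated with a heavily simplified, NumPy-only sketch. This is not the project's TensorFlow setup. Detection is reduced to image classification, the frozen pre-trained backbone (MobileNetV3-Large in the project) is replaced by a fixed random projection, and the "images" are synthetic vectors. Only the classification head is trained, and `add_class` shows how an output for a further object class can be appended while the weights learned so far are kept. All names and hyperparameters are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
IN_DIM, FEAT_DIM = 32, 64

# Stand-in for a frozen pre-trained backbone: a fixed random projection + ReLU,
# L2-normalised. It is never updated during training.
W_BACKBONE = rng.normal(size=(IN_DIM, FEAT_DIM)) / np.sqrt(IN_DIM)


def backbone(x):
    f = np.maximum(x @ W_BACKBONE, 0.0)
    return f / (np.linalg.norm(f, axis=1, keepdims=True) + 1e-8)


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_head(feats, labels, n_classes, W=None, b=None, lr=1.0, epochs=300):
    """Fit (or continue fitting) a linear softmax head on frozen features."""
    if W is None:
        W, b = np.zeros((feats.shape[1], n_classes)), np.zeros(n_classes)
    W, b = W.copy(), b.copy()
    onehot = np.eye(n_classes)[labels]
    for _ in range(epochs):
        grad = (softmax(feats @ W + b) - onehot) / len(feats)  # d(mean CE)/d(logits)
        W -= lr * feats.T @ grad
        b -= lr * grad.sum(axis=0)
    return W, b


def add_class(W, b):
    """Append one output for a new object class; learned weights are kept."""
    return np.hstack([W, np.zeros((W.shape[0], 1))]), np.append(b, 0.0)


def make_samples(class_id, n):
    """Synthetic 'images': noisy vectors around a class-specific prototype."""
    proto = np.random.default_rng(100 + class_id).normal(size=IN_DIM) * 2
    return proto + rng.normal(scale=0.5, size=(n, IN_DIM))


# Initial head with two hypothetical classes
X = np.vstack([make_samples(c, 50) for c in (0, 1)])
y = np.repeat([0, 1], 50)
W, b = train_head(backbone(X), y, n_classes=2)

# Later: add a third class and continue training the head on old + new samples
W3, b3 = add_class(W, b)
X_all = np.vstack([X, make_samples(2, 50)])
y_all = np.concatenate([y, np.full(50, 2)])
W3, b3 = train_head(backbone(X_all), y_all, n_classes=3, W=W3, b=b3)
accuracy = (softmax(backbone(X_all) @ W3 + b3).argmax(axis=1) == y_all).mean()
print(f"training accuracy with 3 classes: {accuracy:.2f}")
```

Training only the head keeps the effort small, which is the main point of transfer learning. Full fine-tuning, as described above, would additionally update some or all of the backbone weights.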

The mobile application was integrated into the digital construction assistant architecture provided by the project partners.

Contacts:

Dirk Balfanz

Project Details

| Project: | DeepSpaceBIM – Der digitale Bauassistent der Zukunft (The Digital Construction Assistant of the Future) |
|---|---|
| Project partners: | M.O.S.S. Computer Grafik Systeme GmbH (Coordinator), Technical University of Darmstadt (TU Darmstadt), DMT GmbH & Co. KG, Drees & Sommer GmbH, Robotic Eyes GmbH, Steinmann Kauer Consulting GbR |
| Project duration: | October 2018 – August 2021 |
| Project funding: | EUR 1.99 million (joint project) |
| Funded by: | German Federal Ministry of Transport and Digital Infrastructure (BMVI) |
| Grant no.: | 19F2057E (TU Darmstadt) |
| Website: | https://www.bmvi.de/SharedDocs/DE/Artikel/DG/mfund-projekte/deepspacebim.html |
| Final report: | Download |