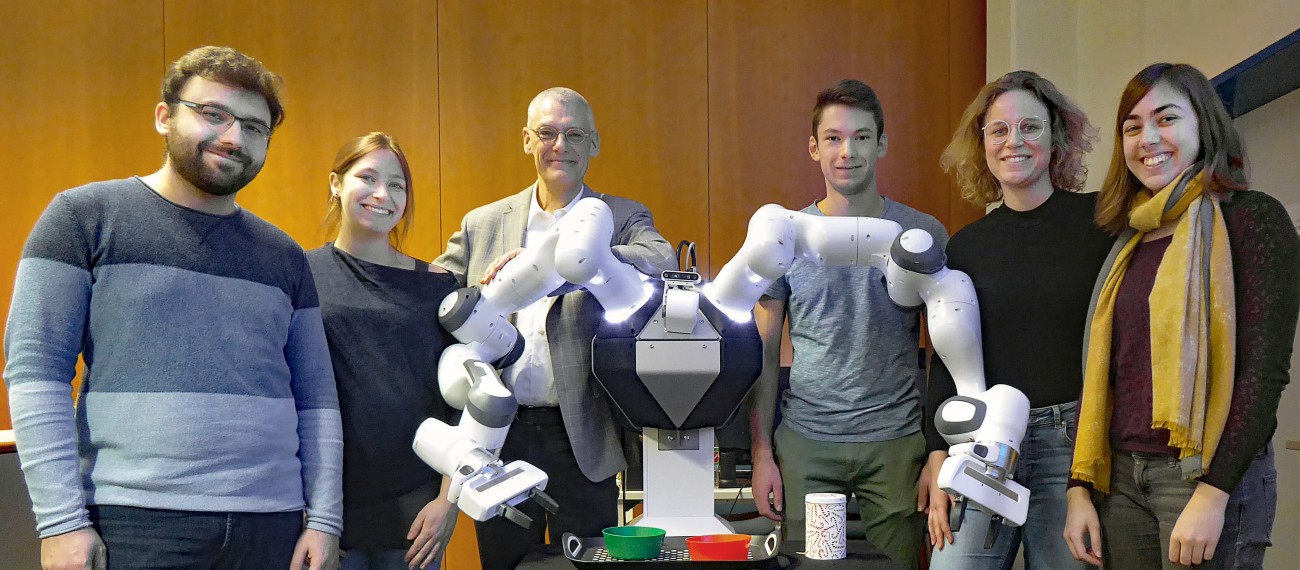

TU Darmstadt’s Centre for Cognitive Science participates with its research groups Psychology of Information Processing (Constantin Rothkopf) and Intelligent Autonomous Systems (Jan Peters). The project consortium comprises robotics company Franka Emika, Technical University of Munich (TU München), Rosenheim University of Applied Sciences (TH Rosenheim), and Technical University of Darmstadt (TU Darmstadt).

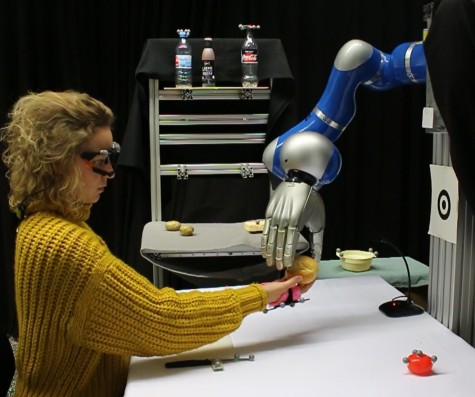

Intention Recognition. One crucial project objective is intuitive communication between elderly people and assistive robots. A robot assistant that is genuinely helpful in everyday activities requires effortless communication between human and robot.

Therefore, a central aspect of our work in KoBo34 is research on automated intention recognition to support robotic “understanding” of human behaviour. Since humans express intentions through different modalities such as hand gestures, gaze direction or speech, it is reasonable to exploit data from several modalities when recognizing human intentions automatically. In particular, this can reduce the uncertainty about the intention to be predicted. Thus, one of the goals of the KoBo34 project is to reduce this uncertainty by combining information from different modalities (Trick et al., 2019).
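To illustrate how combining modalities can reduce uncertainty, here is a minimal sketch. It assumes each modality's classifier outputs a categorical distribution over the same set of intentions, that the modalities are conditionally independent given the intention, and that the prior is uniform. The function names and the example numbers are hypothetical, and the sketch is not necessarily the fusion method used in the project.

```python
import math


def fuse_independent(distributions):
    """Multiply per-modality categorical distributions and renormalise.

    Assumption: modalities are conditionally independent given the
    intention and the prior over intentions is uniform.
    """
    n = len(distributions[0])
    fused = [1.0] * n
    for dist in distributions:
        if len(dist) != n:
            raise ValueError("all modalities must cover the same intentions")
        fused = [f * p for f, p in zip(fused, dist)]
    total = sum(fused)
    if total == 0.0:
        raise ValueError("modalities assign zero probability to every intention")
    return [f / total for f in fused]


def entropy(dist):
    """Shannon entropy in bits, used here as the uncertainty measure."""
    return -sum(p * math.log2(p) for p in dist if p > 0.0)


# Hypothetical classifier outputs over three candidate intentions.
gesture = [0.6, 0.3, 0.1]
gaze = [0.5, 0.1, 0.4]
speech = [0.7, 0.2, 0.1]

fused = fuse_independent([gesture, gaze, speech])
for name, dist in [("gesture", gesture), ("gaze", gaze),
                   ("speech", speech), ("fused", fused)]:
    print(f"{name:8s} entropy = {entropy(dist):.3f} bits")
```

In this example the modalities broadly agree, so the fused distribution has lower entropy than any single modality. When modalities contradict each other, the fused distribution can be more uncertain than the individual ones, which is the desired behaviour for conflicting evidence.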

Interactive Learning. Another core objective of the project is continuous improvement of the interaction by enabling the robot to learn from human input. Here, we work on learning interactive skills by demonstration.

A skill library containing robot movements as components of complex interactive tasks can be taught by kinesthetic teaching. However, many learning algorithms require the number of skills to be specified beforehand, so the whole library needs to be retrained whenever a skill is added. In KoBo34 we developed a new approach that allows open-ended learning of a collaborative skill library. This approach makes it possible to iteratively refine existing skills and to add new ones without such retraining (Koert et al., 2018; Koert, Trick, et al., 2020).
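The idea of an open-ended library can be sketched as follows. This is a deliberately simplified illustration, not the approach from the cited papers: it assumes every demonstration is a trajectory resampled to the same length, represents each skill only by its running mean trajectory, and uses a hypothetical distance threshold `new_skill_distance` to decide between refining an existing skill and creating a new one.

```python
import math


class SkillLibrary:
    """Toy open-ended skill library (illustrative assumption, see lead-in)."""

    def __init__(self, new_skill_distance):
        self.new_skill_distance = new_skill_distance
        self.means = []   # one mean trajectory per skill
        self.counts = []  # number of demonstrations seen per skill

    def add_demonstration(self, trajectory):
        """Absorb one demonstration and return the index of its skill."""
        best, best_dist = None, math.inf
        for i, mean in enumerate(self.means):
            d = math.dist(mean, trajectory)  # Euclidean distance
            if d < best_dist:
                best, best_dist = i, d

        # Too far from every known skill: add a new one, nothing is retrained.
        if best is None or best_dist > self.new_skill_distance:
            self.means.append(list(trajectory))
            self.counts.append(1)
            return len(self.means) - 1

        # Otherwise refine the closest skill with an incremental mean update.
        self.counts[best] += 1
        n = self.counts[best]
        self.means[best] = [m + (x - m) / n
                            for m, x in zip(self.means[best], trajectory)]
        return best


library = SkillLibrary(new_skill_distance=1.0)
a = library.add_demonstration([0.0, 0.5, 1.0])  # first skill is created
b = library.add_demonstration([0.0, 0.6, 1.0])  # refines the first skill
c = library.add_demonstration([2.0, 2.0, 2.0])  # far away: second skill
print(a, b, c, len(library.means))
```

Because each update touches only one skill, the cost of adding a demonstration does not depend on how many demonstrations the other skills have already seen.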

While the previously discussed work focused on learning new skills, we also worked on methods to adapt the robot’s movements online according to human movements, e.g. to avoid collisions. We developed two different modes of adaptation: spatial deformation of the respective robot trajectories and temporal scaling. Both modes were evaluated and compared in experiments with naïve human subjects (Koert et al., 2019).
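The difference between the two adaptation modes can be shown with a small geometric sketch. It is an assumption-laden toy, not the method evaluated in the cited study: the trajectory is a list of 2-D waypoints, the human is a single point, and `safe_distance` is a hypothetical parameter. Temporal scaling keeps the path and changes how fast it is executed, while spatial deformation keeps the timing and changes the path.

```python
import math


def temporal_scale(distance, safe_distance):
    """Speed factor in [0, 1]: 0 at contact, 1 at or beyond safe_distance."""
    return max(0.0, min(1.0, distance / safe_distance))


def deform_spatially(waypoints, human, safe_distance):
    """Push waypoints closer than safe_distance radially out to that distance."""
    deformed = []
    for x, y in waypoints:
        dx, dy = x - human[0], y - human[1]
        d = math.hypot(dx, dy)
        if 0.0 < d < safe_distance:
            scale = safe_distance / d
            deformed.append((human[0] + dx * scale, human[1] + dy * scale))
        else:
            # Far enough away, or exactly at the human position where the
            # push direction is undefined; this toy leaves the point as is.
            deformed.append((x, y))
    return deformed


path = [(0.0, 0.0), (0.5, 0.1), (1.0, 0.0)]
human = (0.5, 0.0)

# Mode 1: same path, executed more slowly while the human is close.
speed = temporal_scale(distance=0.15, safe_distance=0.3)

# Mode 2: same timing, path bent around the human.
deformed = deform_spatially(path, human, safe_distance=0.3)
print(speed, deformed)
```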

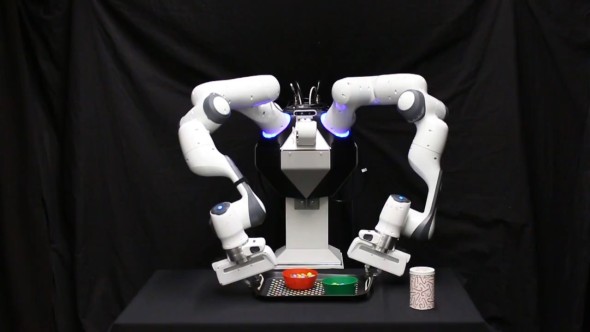

All of these approaches (skill learning by imitation, adaptation of robot trajectories to human movements, and improvement of the robot’s skills to fit the human’s needs) should be applicable to assistive tasks by the project’s end. One example task, preparing a tray of snacks to be served in a home for the elderly, is demonstrated here:

Contacts:

Project Details

| | |
|---|---|
| Project: | KoBo34 – Intuitive Interaktion mit kooperativen Assistenzrobotern für das 3. und 4. Lebensalter (Intuitive Interaction with Cooperative Assistive Robots for the Third and Fourth Age) |
| Project partners: | Franka Emika GmbH (Coordinator), Technical University of Darmstadt (TU Darmstadt), Technical University of Munich (TU München), Rosenheim University of Applied Sciences (TH Rosenheim) |
| Project duration: | July 2018 – December 2021 |
| Project funding: | EUR 1.94 million (joint project) |
| Funded by: | German Federal Ministry of Education and Research (BMBF) |
| Grant no.: | 16SV7984 (TU Darmstadt) |
| Links: | Official Project Page, Official Project Results Overview |
| Final Report: | Download |