Surgeon from a Distance

TU researchers develop AI for operations from a distance

2022/07/05 by Boris Hänßler

Surgical robots require highly qualified assistants, of which there is a worldwide shortage. Artificial intelligence developed at TU Darmstadt is intended to enable remote operations without them.

A few years ago, a report by the Lancet Commission on Global Surgery showed that high-income countries have on average about 71 surgical specialists for every 100,000 people. This compares with 24 in middle-income countries and only one in low-income countries. This means that more than two billion people receive no surgical care. It is not only a problem of global health: there is already a shortage in rural areas of Germany. The coronavirus pandemic has further exacerbated the problem. Surgical training has been neglected because of the overload on the health infrastructure. The effects will be evident in a few years' time.

So-called surgical robots such as “DaVinci” could help to counteract this, but they require highly qualified assistants. In these systems, a surgeon sits at a console that is almost completely separate from the robot. There are usually two trained assistants present who ensure that the required instruments reach the robot's hands or that the endoscope is positioned correctly for an unobstructed view.

“Our aim for the future is to provide surgical care for people anywhere who are in need, so they do not have to travel to conurbations, which is also a difficult logistical problem.”

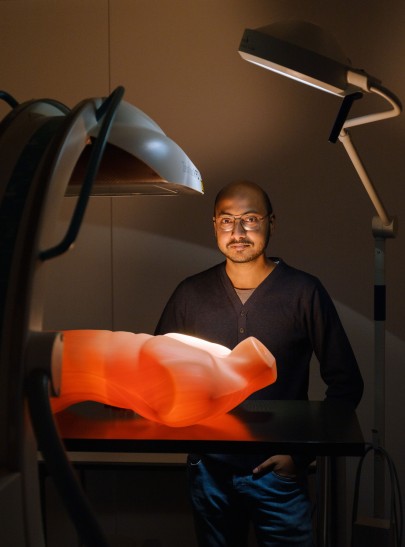

Dr. Anirban Mukhopadhyay, Director of the Independent Junior Research Group MEC-Lab

Surgery as a complex process

“We can imagine that with today's network bandwidth, the console could easily be positioned anywhere in the world, no matter where,” says computer scientist Anirban Mukhopadhyay of the Junior Research Group Medical and Environmental Computing (MEC-Lab) at TU Darmstadt. “And if the bandwidth is stable enough, it is also possible to operate remotely. But where will we find these trained surgical assistants who are on-site for the procedure and will help?” So the researchers working with Mukhopadhyay are developing artificial intelligence that is able to take over these tasks in remote and rural areas or to support less qualified personnel.

Previous research in the field of artificial intelligence, in particular in image analysis, is not sufficient for this. For example, in order to analyse a surgical workflow, the classical methods from computer vision and deep learning algorithms are adapted with minimal changes so they can use the surgical image data. In particular, the pixels of the videos are considered in order to make a classification. But this doesn't do justice to surgery. It's a complex process. It requires a lot of communication, because changes are constantly occurring in the operating theatre. Plus there is a lack of data that can be used to train artificial intelligence. Data sets in the five- or six-digit range are often used for image analysis, but data on that scale are not available for operations. You'll never get lots of examples in surgery because no two operations are completely the same.

“We've looked at how we can start with shapes in the image instead of pixels,” says Mukhopadhyay. “Surgeons are interested in the anatomical shape, the elasticity of these different shapes, and how to operate, what we can touch and what we shouldn't touch. So we tried to incorporate these ideas in the deep learning algorithms.” Shapes only occur with a specific topology or geometry. If an AI can recognise these aspects, then it will require less data for learning.

Example of cochlear implants

One example is cochlear implants, hearing prostheses that are inserted in the inner ear. The prostheses consist of two components, the actual implant and a speech processor that is worn behind the ear like a hearing aid. It contains a microphone that picks up acoustic signals. These are converted into electrical signal patterns in the speech processor and sent through the skin to the implant via a transmitter coil. The implant passes them on to electrodes that stimulate the auditory nerves.

The researchers use preoperative computed tomography scans of the head to train artificial intelligence. These scans are made anyway to plan operations, such as how to insert the probe and place the electrode in the cochlea, so the patient does not have to be exposed to additional radiation in order to create training data. Once the algorithms have found the upper, middle and lower parts of a nerve in these scans, the robot knows where to make the connection with the implant. This means the system does not have to take every pixel in the image into account, but only has to identify the shapes. The advantage of this is that fewer training images are required for deep learning. “We were able to show that our algorithms already work robustly with 15 to 20 examples,” says Mukhopadhyay.

Building on this idea of shape consistency, the researchers introduced supervised contrastive learning – in which the AI learns to segment surgical instruments from real laparoscopic abdominal surgery solely by viewing simulated images.
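To give a rough idea of what supervised contrastive learning optimises, here is a minimal sketch of a generic supervised contrastive loss in plain Python. It is not the researchers' implementation: the function names, the toy two-dimensional embeddings and the temperature value are all assumptions chosen for illustration. The loss is small when samples with the same label lie close together in the embedding space and large when they do not.

```python
import math


def normalize(vector):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


def supervised_contrastive_loss(embeddings, labels, temperature=0.1):
    """Generic supervised contrastive loss, averaged over anchors with a positive.

    `temperature` is an illustrative default, not a value from the article.
    """
    z = [normalize(v) for v in embeddings]
    losses = []
    for i in range(len(z)):
        # Temperature-scaled similarity of anchor i to every other sample.
        sims = {
            j: sum(a * b for a, b in zip(z[i], z[j])) / temperature
            for j in range(len(z))
            if j != i
        }
        positives = [j for j in sims if labels[j] == labels[i]]
        if not positives:
            continue  # an anchor without a same-label partner contributes nothing
        # Log of the softmax denominator, shifted by the maximum for stability.
        top = max(sims.values())
        log_denom = top + math.log(sum(math.exp(s - top) for s in sims.values()))
        # Average negative log-probability of picking a positive for this anchor.
        losses.append(-sum(sims[p] - log_denom for p in positives) / len(positives))
    return sum(losses) / len(losses) if losses else 0.0


# Toy embeddings: the first two and the last two points form two tight groups.
points = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
loss_matching = supervised_contrastive_loss(points, [0, 0, 1, 1])
loss_mismatched = supervised_contrastive_loss(points, [0, 1, 0, 1])
```

In this toy setup, `loss_matching` comes out far smaller than `loss_mismatched`, because the labels agree with the geometric grouping only in the first case. In a segmentation setting the embeddings would come from a neural network rather than being written by hand.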

Unforeseen events

One difficult problem that remains is that events occur during an operation that can make even this image analysis difficult. For instance, the interaction between instrument and tissue can cause bleeding. This then changes the appearance of the scene, and it is also possible that blood may obscure the instrument. Plus tools are used that burn the tissue and cause smoke. Also, the camera may get too close to the instrument and cause reflections on the metal. The robot then has to identify what has happened.

Thanks to this research, it may in future be possible for surgeons to perform operations from their workplaces anywhere in the world. The entire operation would be divided into small phases. The robot would identify each phase and tell the surgeon how likely it is that it will be able to perform an action itself. If it is unable to do so, the surgeon can take complete control. Robots and surgeons would communicate and interact in a similar way to surgeons and assistants, and the patient would enjoy a level of medical care that easily compares with that offered in a specialist clinic.
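The handover idea described here can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the phase names, the confidence values and the 0.9 threshold are hypothetical, and the article does not say how the robot's likelihood estimate would actually be turned into a decision.

```python
from dataclasses import dataclass


@dataclass
class PhaseEstimate:
    phase: str         # hypothetical name of a surgical phase
    confidence: float  # robot's estimated probability of performing the phase itself


def assign_control(estimates, threshold=0.9):
    """Return (phase, controller) pairs; below the threshold the surgeon takes over.

    The threshold is an assumed illustrative value, not one from the research.
    """
    plan = []
    for estimate in estimates:
        if not 0.0 <= estimate.confidence <= 1.0:
            raise ValueError(f"confidence out of range for phase {estimate.phase!r}")
        controller = "robot" if estimate.confidence >= threshold else "surgeon"
        plan.append((estimate.phase, controller))
    return plan


# Hypothetical phases and confidences, for illustration only.
plan = assign_control([
    PhaseEstimate("position endoscope", 0.97),
    PhaseEstimate("dissect tissue", 0.55),
])
```

With these made-up numbers, the robot would keep control of the first phase and hand the second one to the surgeon. A real system would also need to handle changes during a phase, such as the bleeding or smoke mentioned above.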