Until a few years ago, intelligent systems such as robots and digital voice assistants had to be tailored to narrow, specific tasks and contexts. Such systems needed to be programmed and fine-tuned by experts. However, recent developments in artificial intelligence have led to a paradigm shift: instead of explicitly representing knowledge about every information processing step at development time, machines are endowed with the ability to learn. Machine learning makes it possible to leverage large amounts of data, in the hope that the patterns learned from them transfer to new situations. Over the last years, groundbreaking gains in performance have been achieved with deep neural networks, whose design is inspired by the structure of the human brain. Large numbers of interconnected artificial neurons, organized in layers, process the input data at considerable computational cost. Although experts understand the inner workings of such systems, since they designed the learning algorithms, they are often unable to explain or predict the system’s intelligent behavior because of its complexity. Such systems end up as blackboxes, raising the question of how their decisions can be understood and trusted.

WhiteBox – explainable models for human and artificial intelligence

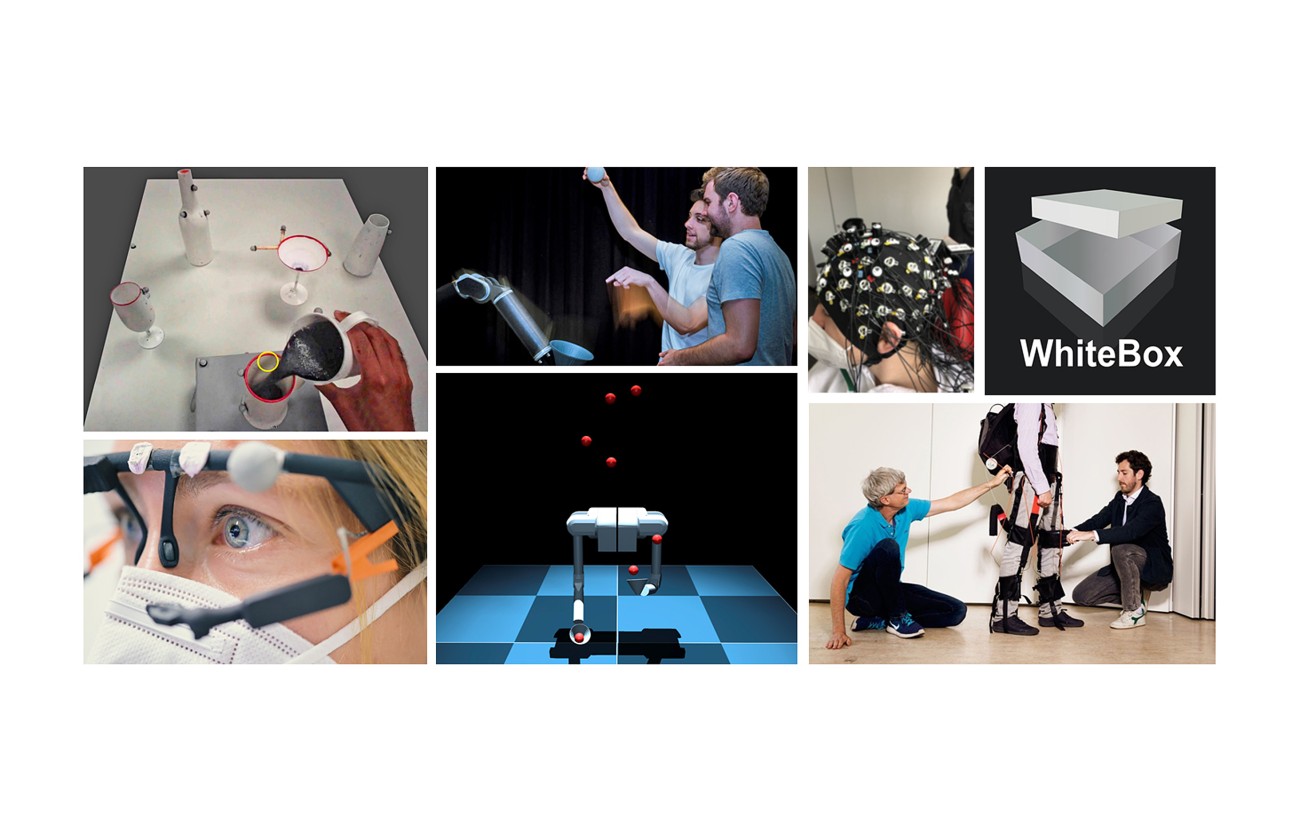

The LOEWE Research Cluster WhiteBox aims to develop methods at the intersection of Cognitive Science and AI that make human and artificial intelligence more understandable.

Project Introduction

Our basic hypothesis is that explaining an artificial intelligence system may not be fundamentally different from explaining intelligent goal-directed behavior in humans. The behavior of a biological agent is likewise based on information processing by a large number of neurons in the brain and on acquired experience. However, an explanation based on a complete wiring diagram of the brain and all its interactions with the environment may not be understandable. Instead, explanations of intelligent behavior need to reside at a more abstract computational level: they need to be cognitive explanations. Such explanations are developed in computational cognitive science. Thus, WhiteBox aims to turn blackbox models into whitebox models through cognitive explanations that are interpretable and understandable.

Following this hypothesis, we will systematically develop and compare whitebox and blackbox models of artificial intelligence and human behavior. To quantify the differences between these models, we will not only develop novel blackbox and whitebox models but also devise methods for comparing them quantitatively and interpretably. In particular, we will develop new methodologies for generating explanations automatically by means of AI. For example, blackbox models include deep neural networks, whereas whitebox models can be probabilistic generative models with explicit and interpretable latent variables. Applying these techniques to intelligent goal-directed human behavior will provide better computational explanations of human intelligent behavior and allow human-level behavior to be transferred to machines.

News

Picture: Markus Scholz for the Leopoldina

„Knowledge versus Opinion“ @ Leopoldina

November 28, 2025

Strengthening science and democracy from within, in a playful way: with its science simulation game „Knowledge versus Opinion“ („Wissen versus Meinung“), ProLOEWE will visit the Leopoldina in Halle on November 28, 2025.

Picture: Fabian Wolf

„Zwei Wissenschaftler im Tiefenrausch“ („Two Scientists in the Rapture of the Deep“)

October 31, 2025

ProLOEWE interview with WhiteBox speakers Constantin Rothkopf and Kristian Kersting

Picture: 2025 TU Darmstadt

WebAI Hackathon 2025

July 28, 2025

Innovative web applications with a new AI framework

From July 23 to 25, 2025, TU Darmstadt became a hub for creative web development: At the WebAI Hackathon, six teams developed functional web applications with integrated AI components in just three days.

Newsticker

December 31, 2025 – After five years of cutting-edge research at the intersection between Cognitive Science and AI, the LOEWE Research Cluster WhiteBox has been successfully completed.

At the beginning of 2026, the Clusters of Excellence EXC 3066 ‘The Adaptive Mind’ (TAM) and EXC 3057 ‘Reasonable Artificial Intelligence’ (RAI) will commence and continue this successful basic research in an expanded framework.

November 28, 2025 – „Knowledge versus Opinion“. ProLOEWE visits the German National Academy of Sciences Leopoldina and presents a simulation game on artificial intelligence co-developed by WhiteBox. Learn more

October 2025 – „Zwei Wissenschaftler im Tiefenrausch“. ProLOEWE interview with WhiteBox speakers Constantin Rothkopf and Kristian Kersting. Learn more

October 23, 2025 – Congratulations! Claire Ott successfully defended her dissertation “Explaining Decisions: On Problem Solving and Analogical Reasoning in Human-Centered Explainable AI”. The thesis was supervised by Prof. Dr. Frank Jäkel and Prof. Dr. Kai-Uwe Kühnberger. Learn more

October 20, 2025 – Congratulations! Omid Mohseni successfully defended his dissertation “Synthesis of Legged Locomotion in Humans and Machines Through Force Based Concerted”. The thesis was supervised by Prof. Dr. Mario Kupnik and Prof. Dr. André Seyfarth.

October 15, 2025 – Congratulations! Asghar Mahmoudi Khomami successfully defended his dissertation “The Design Optimization Platform: A Predictive Simulation-based and User-centered Design Framework for Assistive Wearable Devices”. The thesis was supervised by Prof. Dr.-Ing. Stephan Rinderknecht and Prof. Dr. André Seyfarth.

September 1–5, 2025 – The International Interdisciplinary Summer School for Computational Cognitive Science (IICCSSS) 2025 took place at the Centre for Cognitive Science in Darmstadt, attended by around 70 students. The program featured keynote talks, poster and paper sessions, lab tours, and a hands-on hackathon. As part of the lab tours, WhiteBox opened the robot lab and the locomotion lab, showcasing its results and ongoing research. Learn more

July 23-25, 2025 – WebAI Hackathon 2025 – Innovative web applications with a new AI framework. Using a new framework, teams of students developed creative web applications with integrated AI components in just three days. Learn more

July 10, 2025 – As part of the international conference AMAM/LokoAssist 2025, WhiteBox presented results of its work in the workshop “WhiteBox – Whitebox Modeling of Human Movement” on July 10. Learn more

May 25, 2025 – The ProLOEWE simulation game “Knowledge vs. Opinion: Artificial Intelligence” („Wissen vs. Meinung: Künstliche Intelligenz“) was honored at the Open Campus Day as part of the xchange Prize 2025 awarded by TU Darmstadt in the Knowledge Transfer & Science Communication category. Learn more

May 22, 2025 – A major success for the Technical University of Darmstadt and the Centre for Cognitive Science: in the competition for the prestigious Excellence Strategy of the German federal and state governments, the Excellence Commission selected the proposals ‘The Adaptive Mind’ (TAM) and ‘Reasonable Artificial Intelligence’ (RAI) from the fields of cognitive science and artificial intelligence for funding. Learn more

May 2025 – The Excellence Strategy is in its final sprint. The decision on funding the Clusters of Excellence will be made in May 2025; funding for the selected projects will then start on January 1, 2026, for a period of seven years. The preparation of the Hessian excellence applications has benefited greatly from funding provided by the state of Hesse. Learn more

April 14, 2025 – Congratulations! Matthias Schultheis successfully defended his dissertation “Inverse Reinforcement Learning for Human Decision-Making Under Uncertainty”. The thesis was supervised by Prof. Dr. Heinz Koeppl and Prof. Constantin A. Rothkopf, Ph.D. Learn more

February 5, 2025 – At the invitation of WhiteBox and the Centre for Cognitive Science, David Franklin (Technical University of Munich) presented his work on “Computational Mechanisms of Human Sensorimotor Adaptation” in Darmstadt today, and discussed our scientific approaches.

November 20, 2024 – At the invitation of WhiteBox and the Centre for Cognitive Science, Anna Hughes (University of Essex) presented her work on “Hide and seek: the psychology of camouflage and motion confusion” in Darmstadt today, and discussed our scientific approaches.

November 6, 2024 – At the invitation of WhiteBox and the Centre for Cognitive Science, Mate Lengyel (University of Cambridge) presented his work on “Normative models of cortical dynamics” in Darmstadt today, and discussed our scientific approaches.

October 23, 2024 – At the invitation of WhiteBox and the Centre for Cognitive Science, Leonie Oostwoud Wijdenes (Radboud University Nijmegen) presented her work on “Variability in sensorimotor control” in Darmstadt today, and discussed our scientific approaches.

October 2024 – The WhiteBox research team met from October 7 to 11 at Laacher See in the Eifel for their third retreat. The main topics were interdisciplinary scientific exchange and discussion of the current status of the work.

July 17, 2024 – At the invitation of WhiteBox and the Centre for Cognitive Science, Jozsef Fiser (Central European University, Vienna, Austria) presented his work on “Complex perceptual decision making processes in humans” in Darmstadt today, and discussed our scientific approaches.

July 15, 2024 – WhiteBox is engaged in the science simulation game “Knowledge versus Opinion” at schools in the federal state of Hesse. The simulation game introduces pupils to the importance of science and democracy. Learn more

July 10, 2024 – At the invitation of WhiteBox and the Centre for Cognitive Science, Ralf Haefner (University of Rochester) presented his work on “Causal inference during motion perception, and its neural basis” in Darmstadt today, and discussed our scientific approaches.

June 2024 – On June 13–14, 2024, the 4th Movement Academy, organized mainly by the DFG RTG LokoAssist, took place in Bensheim with over 50 participants. The Movement Academy aims to create a unique learning space for national and international movement experts and others interested in movement, who come from a variety of backgrounds including arts, sports, medicine, therapy and science. Given the topics involved, the conference was also supported by the Centre for Cognitive Science and the WhiteBox research cluster, among others. Learn more

June 12, 2024 – At the invitation of WhiteBox and the Centre for Cognitive Science, Maria Eckstein (Google DeepMind) presented her work on “Hybrid Modeling with Artificial Neural Networks Reveals how Memory Shapes Human Reward-Guided Learning” in Darmstadt today, and discussed our scientific approaches.

April 25, 2024 – WhiteBox supported this year’s Girls' Day, aimed at fostering curiosity and interest in cognitive science. The participants engaged in immersive sessions exploring various topics within the field. Learn more

April 2024 – WhiteBox supports the awareness event “Uncovered Biases: The Power of Awareness in Dealing with Unconscious Bias” on April 24, 2024. (The event is held in German only.) Learn more

January 2024 – In a publication in the renowned journal “Nature Human Behaviour”, WhiteBox researchers investigate the properties of behavioral economic theories automatically learned by artificial intelligence. The study highlights that cognitive science still cannot be easily automated by artificial intelligence and that a careful combination of theoretical reasoning, machine learning and data analysis is needed to understand and explain why human decisions are the way they are and deviate from the mathematical optimum. Read more (in German)

Project Details

- Project: WhiteBox – explainable models for human and artificial intelligence (Erklärbare Modelle für menschliche und künstliche Intelligenz)

- Project partners: Technical University of Darmstadt (TU Darmstadt)

- Project duration: January 2021 – December 2025

- Project funding: EUR 4.7 million

- Funded by: Hessian State Ministry of Higher Education, Research and the Arts

- Funding Line: LOEWE Research Cluster, Funding Round 13

- Funding ID: LOEWE/2/13/519/03/06.001(0010)/77